Fault Tolerance and Load Balancing

This page contains strategies, examples and notes on achieving high availability with VuFind®.

High-Availability Strategies

Load-Balancing

Load-balancing distributes requests across multiple servers, or VuFind® nodes. If configured correctly, a load-balanced configuration can provide a degree of high-availability, enabling per-node downtime or maintenance.

If possible, load balancers should be redundant themselves. Many vendor load balancers (F5, Barracuda, Kemp, etc.) and open-source solutions (Zen, HAProxy, etc.) have documented solutions to achieve high availability.

Many installations separate the VuFind® front-end (Apache, MySQL, etc.) from the Solr back-end in order to limit the impact of downtime on any one server and to keep service management simple.

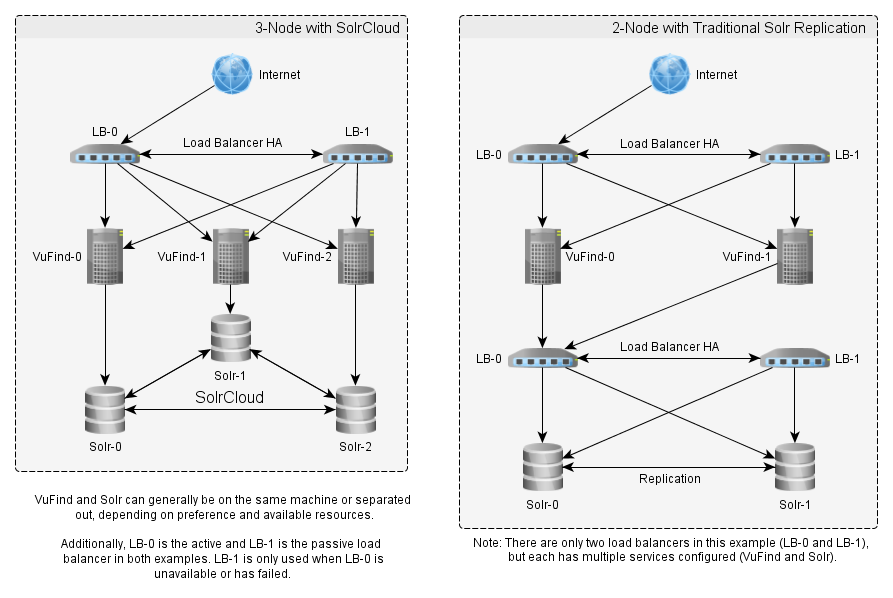

Below are two example configurations:

Front-End Sessions

When configuring a load-balancer, it's important to keep in mind how user sessions will be affected. If a user logs in, they might only have a session with one of the many nodes that can serve requests, meaning they may have to log in multiple times while trying to use VuFind® (a bad thing).

There are two primary strategies for dealing with this issue:

Node Persistence

Most load-balancers can be configured such that a user will always hit the same node during their session. This is often referred to as sticky sessions. The strategies for achieving this goal depend on the load balancer but can range from using cookies to keeping track of IP addresses. Consult the documentation for the load balancer to learn about the strategies available.

The issue with this strategy is that if a front-end node goes down, all the users using that node will be kicked off and will need to re-authenticate when served by another node. Additionally, load may not be ideally distributed across the nodes, depending on the strategy used.
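
As a rough illustration of IP-based persistence, the sketch below hashes a client address to choose a node. The node names and the `pick_node` helper are hypothetical, and a real load balancer implements its own persistence mechanism; this only shows why the same client keeps reaching the same node.

```python
import hashlib

NODES = ["node1", "node2", "node3"]  # hypothetical node names


def pick_node(client_ip: str, nodes: list) -> str:
    """Map a client IP to a node deterministically (simple hash-modulo)."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(nodes)
    return nodes[index]


# The same client lands on the same node as long as the node list is unchanged.
first = pick_node("192.0.2.10", NODES)
second = pick_node("192.0.2.10", NODES)
```

With a plain hash-modulo scheme like this one, removing a node changes the mapping for many clients, which matches the drawback described above: users may be moved to a node where they have no session.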

Session Distribution

If sessions are stored inside a local database instance on a node (MySQL/MariaDB/etc.), then replication or clustering technologies for the database can be leveraged to synchronize session information across nodes, making node persistence unnecessary. Consult the documentation of your chosen database software for information on setting up replication.

- MariaDB Galera Cluster: https://mariadb.com/kb/en/mariadb/galera-cluster/

Solr Replication

There are two primary options for keeping multiple Solr nodes synchronized:

- SolrCloud: https://cwiki.apache.org/confluence/display/solr/SolrCloud (more complex, but more reliable)

- Traditional Replication: Solr Replication (less complex, but less flexible)

Example Configuration

This example is by no means complete, but it gives some hints and guidelines for creating a fault tolerant and load balanced VuFind® service. Configuration of a load balancer is out of the scope of this document. A hardware load-balancer could be used as well as a software-based solution such as HAProxy.

There are of course many ways to improve the fault tolerance of a service; this example describes one way to implement a fault-tolerant and load-balanced VuFind®. The basic idea is to replicate a single VuFind® server onto at least three separate servers. Three matters because it allows clustered services to reach a majority vote and agree on a leader even when one node is unreachable. This is often called having a quorum. It aims to avoid the so-called split-brain situation, where two groups of servers cannot communicate with each other but both continue to serve users' requests. Having at least three servers also means that when one fails, total capacity suffers less than it would with only two servers.
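
The arithmetic behind the three-server recommendation can be sketched with a simple majority rule. This is an illustration only: the function names are hypothetical, and clustering software such as Galera or SolrCloud's coordination layer applies its own, more detailed quorum rules.

```python
def quorum_size(total_nodes: int) -> int:
    """Smallest number of nodes that forms a strict majority."""
    return total_nodes // 2 + 1


def has_quorum(reachable_nodes: int, total_nodes: int) -> bool:
    """True if the reachable group is a strict majority of the cluster."""
    return reachable_nodes >= quorum_size(total_nodes)


# Two-node cluster split 1/1: neither side has a majority.
print(has_quorum(1, 2))  # False
# Three-node cluster split 2/1: only the two-node side keeps quorum.
print(has_quorum(2, 3))  # True
print(has_quorum(1, 3))  # False
```

With two nodes, any loss of communication leaves both sides without a majority; with three, one side can always continue while the isolated node stops accepting writes.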

While it's easy to replicate VuFind®'s PHP-based front-end on multiple servers, more effort is required to also replicate the most important underlying services: the MySQL/MariaDB database and the Solr index.

Required Software Components

All of the components below will have an instance running on each server node.

- VuFind®, of course

- MariaDB Galera Cluster

  - This replaces the standard MySQL or MariaDB installation and allows VuFind® to store searches, sessions, user data etc. in a consistent way on any of the nodes.

  - See https://mariadb.com/kb/en/mariadb/getting-started-with-mariadb-galera-cluster/ for instructions on how to get started with a database cluster.

- SolrCloud

  - This replaces the single Solr instance VuFind® comes with.

  - See https://cwiki.apache.org/confluence/display/solr/SolrCloud for information on setting up SolrCloud.

  - There's also a stand-alone Solr 5.x installation maintained by the National Library of Finland with a VuFind®-compatible schema. It has some custom fields but could be used as a base for the SolrCloud nodes. See https://github.com/NatLibFi/NDL-VuFind-Solr for more information.

Load Balancer and Apache Setup

To let Apache on the front-end servers see the user's original IP address, configure the load balancer to set the X-Forwarded-For header and install the mod_rpaf module for Apache. We use the following configuration:

    LoadModule rpaf_module modules/mod_rpaf.so
    RPAF_Enable On
    RPAF_ProxyIPs [proxy IP address]
    RPAF_Header X-Forwarded-For
    RPAF_SetHostName On
    RPAF_SetHTTPS On
    RPAF_SetPort On

VuFind® Setup

Here it is assumed that the MariaDB Galera Cluster and SolrCloud are already up and running on each node. Basic setup of VuFind® is described elsewhere, and it is recommended to test it out on a single node first to see that everything works properly. The servers are assumed to have IP addresses from 10.0.0.1 to 10.0.0.3.

- Configure VuFind® to connect to the MariaDB node on localhost.

- Configure VuFind® to connect to all the SolrCloud nodes by specifying their addresses as an array in config.ini like this so that it will automatically try other Solr nodes if the local one is unavailable:

    [Index]
    url[] = http://10.0.0.1:port/solr
    url[] = http://10.0.0.2:port/solr
    url[] = http://10.0.0.3:port/solr

- Configure VuFind® to use database-stored sessions in config.ini. This makes the sessions available on all nodes, so no sticky sessions are required:

    [Session]
    type = Database

- Set up the load balancer to use a health probe to check server status from VuFind® so that it knows whether VuFind® is available. From version 2.5 VuFind® has an API that can be used to check the status at http://10.0.0.x/AJAX/SystemStatus. It returns a simple string “OK” with status code 200 if everything is working properly. Otherwise it returns a message describing the issue starting with “ERROR”.

- Set up scheduled tasks like removal of expired searches to run on only one of the servers.

- Set up AlphaBrowse index creation (if you use it) to run on ALL servers. AlphaBrowse index files are not automatically distributed to other nodes by Solr.
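
The health probe rule from the list above (status code 200 with the body “OK”) can be sketched as follows. This is a minimal illustration, not how any particular load balancer implements its checks: the helper names `is_healthy` and `probe`, the timeout, and the whitespace trimming are assumptions.

```python
from urllib.error import URLError
from urllib.request import urlopen


def is_healthy(status_code: int, body: str) -> bool:
    """Apply the rule described above: HTTP 200 and a body of "OK".

    Trimming surrounding whitespace is an assumption of this sketch.
    """
    return status_code == 200 and body.strip() == "OK"


def probe(url: str, timeout: float = 5.0) -> bool:
    """Fetch the status URL and report whether the node looks healthy."""
    try:
        with urlopen(url, timeout=timeout) as response:
            body = response.read().decode("utf-8", errors="replace")
            return is_healthy(response.status, body)
    except (URLError, OSError):
        # Connection failures, timeouts and HTTP error statuses count as unhealthy.
        return False
```

For example, `probe("http://10.0.0.1/AJAX/SystemStatus")` would check the first node from the address range assumed in this section.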

Special Considerations

Static File Timestamps

It is important to make sure all the static files served by VuFind® have identical timestamps on all the servers. There are multiple ways to achieve this, such as:

- Deploy the files using a .zip package so that timestamps are preserved.

- Deploy from a git repository and use git-restore-mtime-core to set timestamps to the latest commit.

- Use the touch command to set all timestamps to a manually defined moment.

In any case, make sure the timestamps are advanced if a file is changed.
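
As one possible take on the "manually defined moment" option, the sketch below sets the timestamps of every file under a directory to a single value, much like running touch with a fixed date. The function name and the example path are hypothetical.

```python
import os


def set_tree_mtime(root: str, timestamp: float) -> int:
    """Set access and modification times of every file under root.

    Returns the number of files updated.
    """
    count = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            os.utime(os.path.join(dirpath, name), (timestamp, timestamp))
            count += 1
    return count
```

Running something like `set_tree_mtime("/path/to/vufind/themes", 1700000000)` with the same timestamp on every server would give all copies identical modification times; the timestamp must be increased whenever a file changes.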

Apache ETag

An ETag is used between a web server and a browser, in addition to the last modification time, to check whether a file has changed. The server sends an ETag header along with the file, and when the browser requests the file again it includes that ETag in the request (in the If-None-Match header). If the tags match (and the file is not newer than the If-Modified-Since header in the request), the server can optimize the transfer by returning just a 304 “Not Modified” status code without the file content. Otherwise the whole file is returned.

Depending on its version, Apache may by default use the file's inode number in addition to its modification time and size when creating the ETag (this was the default up to version 2.3.14). Inode numbers differ between servers, which causes trouble with caching when the file may be served from any of three servers. Therefore it's important to configure Apache to use only the file modification time and size with the following directive:

FileETag MTime Size

Now the ETag of a file will be identical on all servers as long as the modification time and file size are identical.
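
To see why leaving out the inode matters, the sketch below builds two illustrative validators for two copies of the same file, with two temporary directories standing in for two servers. The tag format here is made up for the demonstration and is not Apache's actual ETag format.

```python
import os
import tempfile


def mtime_size_tag(path: str) -> str:
    """Illustrative validator built from modification time and size only."""
    info = os.stat(path)
    return f"{info.st_mtime_ns:x}-{info.st_size:x}"


def inode_mtime_size_tag(path: str) -> str:
    """Illustrative validator that also includes the inode number."""
    info = os.stat(path)
    return f"{info.st_ino:x}-{info.st_mtime_ns:x}-{info.st_size:x}"


def make_copy(directory: str, data: bytes, timestamp: int) -> str:
    """Write data to a file in directory and give it a fixed timestamp."""
    path = os.path.join(directory, "style.css")
    with open(path, "wb") as handle:
        handle.write(data)
    os.utime(path, (timestamp, timestamp))
    return path


# Two temporary directories stand in for two servers holding the same file.
with tempfile.TemporaryDirectory() as server_a, \
        tempfile.TemporaryDirectory() as server_b:
    copy_a = make_copy(server_a, b"body {}", 1700000000)
    copy_b = make_copy(server_b, b"body {}", 1700000000)
    same_without_inode = mtime_size_tag(copy_a) == mtime_size_tag(copy_b)
    same_with_inode = inode_mtime_size_tag(copy_a) == inode_mtime_size_tag(copy_b)
```

The two copies agree when only modification time and size are used, but not when the inode is included, because each copy has its own inode.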

Asset Pipeline and other shared files

Load balancing and VuFind®'s asset pipeline are currently not compatible. The problem is that the request for which the assets were created could be served by a different server than the one the client requests them from. Without software modifications there are at least the following possibilities:

- Use a shared disk for all the load-balanced servers. This might have performance and reliability implications.

- Use sticky sessions in the load balancer. This has its own downsides: a client's future requests keep going to the same server as before, which can cause imbalance between the servers, especially when new ones are added.

Note that the above issues also affect things like the cover cache, but since covers can always be recreated from the source, it does not cause actual issues with servicing the requests.

Additional Implementation Notes

- If you use Shibboleth, configure it to use ODBC to store its data so that it's available on all the server nodes. If you're running RHEL/CentOS 6.x, check out https://issues.shibboleth.net/jira/browse/SSPCPP-473 first.

- At the time of writing, it is not recommended to run Piwik in a load-balanced environment like this. A Piwik FAQ entry (http://piwik.org/faq/new-to-piwik/faq_134/) suggests using a single database server. In any case, statistics tracking could be considered less critical, so it could reside on a single server.